Alibaba has launched the foundational model Qwen 2.5, along with Qwen 2.5-Coder specialized for coding and Qwen 2.5-Math for mathematics. This trio of models features over 10 versions in total, and Qwen 2.5 has surpassed the Llama-3.1 instruction-tuned model in several benchmark tests, with a significant increase in pre-training data reaching 18 trillion tokens.

In the early hours today, Alibaba officially announced the largest open source release in its history, introducing the foundational model Qwen 2.5, along with the coding-focused Qwen 2.5-Coder and the math-oriented Qwen 2.5-Math.

These three categories of models comprise over 10 versions, including 0.5B, 1.5B, 3B, 7B, 14B, 32B, and 72B, suitable for individuals, enterprises, and various business scenarios across mobile and PC platforms.
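To make the "mobile to PC to enterprise" range concrete, here is a rough sketch of how much memory the weights alone would need at each listed size. It assumes the nominal parameter counts from the list above (real checkpoints differ slightly) and standard byte widths for each numeric format. It ignores activations, the KV cache, and runtime overhead, so treat the numbers as lower bounds rather than deployment guidance.

```python
# Nominal parameter counts (in billions) for the seven sizes named in the article.
QWEN25_SIZES_B = [0.5, 1.5, 3, 7, 14, 32, 72]

# Bytes per parameter for common numeric formats (standard widths).
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billion: float, dtype: str = "fp16") -> float:
    """Approximate memory for the weights alone, in GB (10^9 bytes)."""
    total_bytes = params_billion * 1e9 * BYTES_PER_PARAM[dtype]
    return total_bytes / 1e9


if __name__ == "__main__":
    for size in QWEN25_SIZES_B:
        row = ", ".join(
            f"{dtype}: {weight_memory_gb(size, dtype):.2f} GB"
            for dtype in BYTES_PER_PARAM
        )
        print(f"{size}B -> {row}")
```

Under these assumptions the smallest model needs about 1 GB in fp16, while the 72B model needs about 144 GB, which is why the small sizes are the ones suited to phones and PCs.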

For those looking to avoid cumbersome deployment, Alibaba has also opened APIs for its flagship models Qwen-Plus and Qwen-Turbo, helping you quickly develop or integrate generative AI features.
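As an illustration of what integrating through an API might look like, the sketch below only builds a request and does not send it. The endpoint URL, the OpenAI-style message format, and the lowercase model identifiers `qwen-plus` and `qwen-turbo` are assumptions not stated in this article, so check them against Alibaba Cloud's current documentation before use. `build_chat_request` is a hypothetical helper name.

```python
import json

# Assumption: an OpenAI-style chat-completions endpoint. The URL and the model
# identifiers are not taken from the article; verify them before use.
ASSUMED_ENDPOINT = (
    "https://dashscope.aliyuncs.com/compatible-mode/v1/chat/completions"
)


def build_chat_request(prompt: str, model: str = "qwen-plus",
                       api_key: str = "YOUR_API_KEY"):
    """Return (headers, json_body) for a single-turn chat request."""
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return headers, json.dumps(body)


if __name__ == "__main__":
    headers, body = build_chat_request("Summarize Qwen 2.5 in one sentence.")
    print(ASSUMED_ENDPOINT)
    print(body)
```

Keeping request construction separate from sending makes it easy to swap the model name (for example, the cheaper Turbo tier) without touching network code.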

According to a report on September 19 in Hangzhou, Alibaba Cloud launched the world’s strongest open source large model, Qwen 2.5-72B, which significantly outperforms Llama 3.1-405B, reclaiming the title of the world's top open source large model.

Additionally, a host of Qwen 2.5 series models has been made open source, including the language model Qwen 2.5, the vision-language model Qwen 2-VL-72B, the coding model Qwen 2.5-Coder, and the math model Qwen 2.5-Math, totaling over 100 models. Some of them even outperform GPT-4o on certain benchmarks, setting new world records.

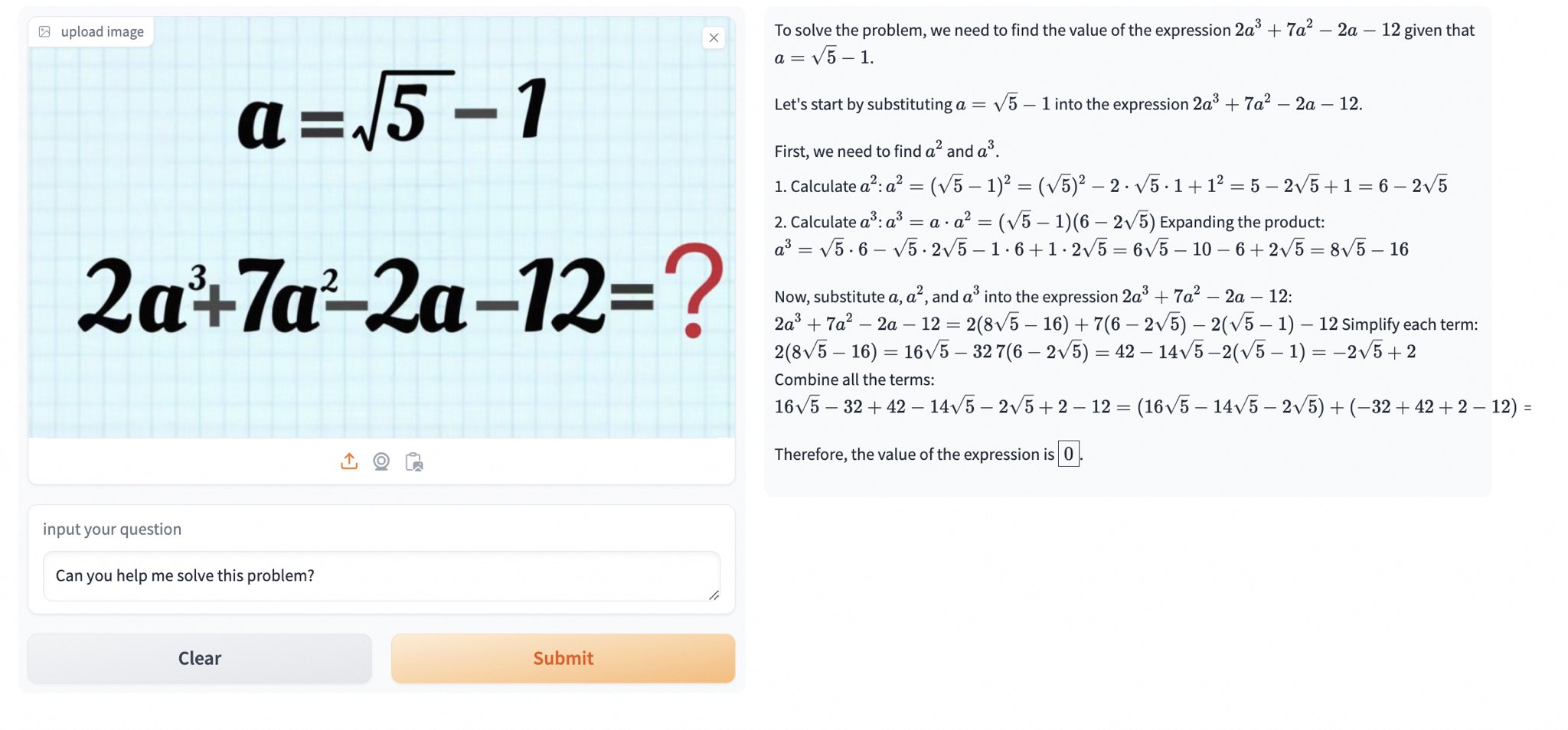

Qwen 2.5 has sparked a wave of discussion on social media both in China and abroad. For example, the Qwen 2.5-Math model, combined with visual recognition capabilities, can quickly identify a math problem from a screenshot and return a correct solution with its working, with impressive accuracy and speed.

1. Reclaiming the Global Top Spot: Qwen 2.5 Surpasses Llama 3.1-405B

Let’s take a closer look at Qwen 2.5’s performance. The Qwen 2.5 model supports a context length of up to 128K tokens and can generate up to 8K tokens in a single response, which makes it capable of helping users write lengthy articles. It also supports more than 29 languages.
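The two limits interact: in a typical decoder-only model, the prompt and the generated text share the same context window. The sketch below works out how many tokens remain for the prompt. It assumes "128K" and "8K" mean 131,072 and 8,192 tokens and that generated tokens count against the context window; neither detail is stated in the article. `max_prompt_tokens` is a hypothetical helper.

```python
CONTEXT_WINDOW = 128 * 1024  # "128K" read as 131,072 tokens (assumption)
MAX_GENERATION = 8 * 1024    # "8K" read as 8,192 tokens (assumption)


def max_prompt_tokens(requested_output: int,
                      context_window: int = CONTEXT_WINDOW,
                      max_generation: int = MAX_GENERATION) -> int:
    """Tokens left for the prompt after reserving room for the output.

    The requested output is capped at the generation limit, then subtracted
    from the context window.
    """
    if requested_output < 0:
        raise ValueError("requested_output must be non-negative")
    reserved = min(requested_output, max_generation)
    return context_window - reserved


if __name__ == "__main__":
    print(max_prompt_tokens(8192))  # full-length generation reserved
    print(max_prompt_tokens(512))   # short answer leaves more room for input
```

Under these assumptions, reserving the full 8K output still leaves 122,880 tokens for the input.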

Moreover, pre-trained on 18 trillion tokens of data, Qwen 2.5 shows an overall performance improvement of over 18% compared to Qwen 2, with broader knowledge and stronger programming and mathematical capabilities. The flagship Qwen 2.5-72B model scored 86.8, 88.2, and 83.1 on the MMLU-redux benchmark (general knowledge), the MBPP benchmark (coding ability), and the MATH benchmark (mathematics ability), respectively.

The 72 billion parameter Qwen 2.5 has even "crossed a magnitude" to surpass Llama 3.1-405B, which has 405 billion parameters, roughly 5.6 times as many. Llama 3.1-405B, released by Meta in July 2024, matched or surpassed the then-SOTA model GPT-4o in over 150 benchmark tests, leading to the conclusion that "the strongest open source model is the strongest model."

The instruction-following version Qwen 2.5-72B-Instruct has outperformed Llama 3.1-405B in authoritative evaluations such as MMLU-redux, MATH, MBPP, LiveCodeBench, Arena-Hard, AlignBench, MT-Bench, and MultiPL-E.

2. The Birth of the Largest Model Family Ever: Over 100 Open Source Models Released

The number of open source models under Qwen 2.5 is unprecedented. Alibaba Cloud's CTO, Zhou Jingren, announced at the Yunqi Conference that the Qwen 2.5 series has released over 100 open source models, fully catering to the needs of developers and small to medium-sized enterprises.

Language Models: Ranging from 0.5B to 72B across seven sizes, covering all scenarios from edge to industrial-level applications.

The Qwen 2.5 series has released seven sizes of language models, including 0.5B, 1.5B, 3B, 7B, 14B, 32B, and 72B, achieving SOTA performance in their respective tracks.

3. Tongyi Qianwen Downloads Exceed 40 Million, Over 50,000 Derivative Models Created

After a year and a half of rapid growth, Tongyi Qianwen has become the second largest global model family after Llama. Zhou Jingren revealed two sets of recent data to support this:

First, the model download volume has exceeded 40 million as of early September 2024, reflecting developers' and SMEs' strong interest.

Second, the number of derivative models has surpassed 50,000, placing Tongyi's original and derivative models just behind Llama.


